Podcast Episode Details

Generative AI tools like ChatGPT mimic the processes of the human brain on a less sophisticated and dynamic level than the actual human brain. In this article, we explore why people personify these tools anyway, and why it may be harmful to do so. Listen to the podcast to find out about:

How generative AI tools like ChatGPT work (at a very high level)

How pattern recognition in the human mind leads to the personification of AI tools

The potentially negative implications of personifying digital tools like ChatGPT

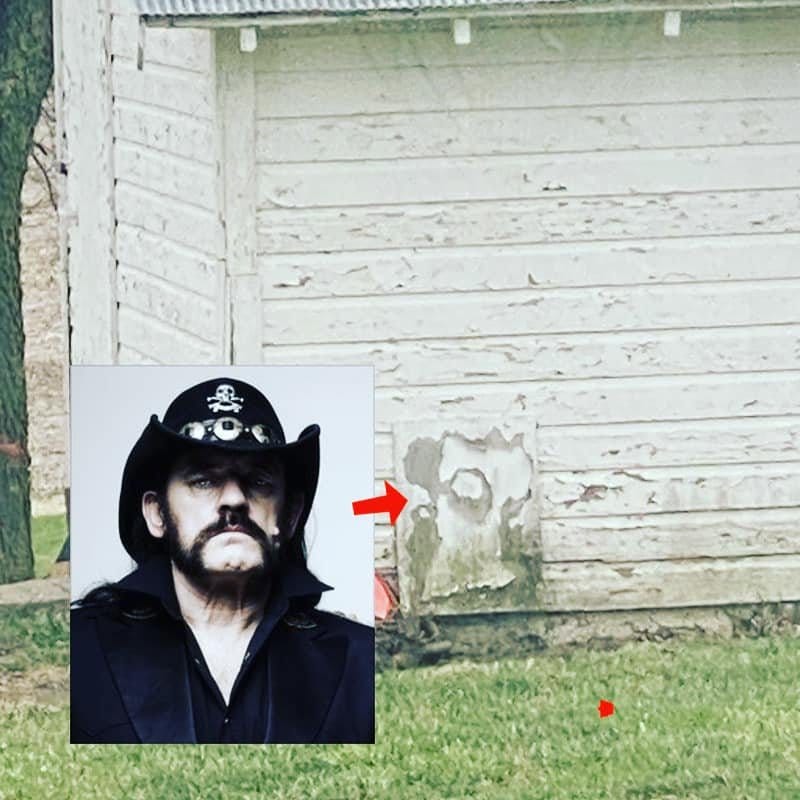

Finding Lemmy On My Shed

Several years ago, I noticed something curious on the side of my shed. The pattern of chipped and worn-away paint on the corner of one wall bore a striking resemblance to heavy metal icon Lemmy Kilmister. That's weird, right?

Logically, I knew that my shed, a repurposed 100-year-old chicken coop on my family's farm, didn't really contain the essence of the deceased lead singer of one of the greatest metal bands: Motörhead.

That didn't stop me from giving a nod to ol' Lemmy as I rode by on the lawn mower, or walked past on the way to retrieve something from the shed.

When that part of the shed was in need of maintenance, I gave more than a moment's thought about how to complete repairs and still preserve "Shed Lemmy."

Recognizing Familiar Patterns

The human brain has evolved to recognize familiar patterns in things. It's called pareidolia, and it often takes the form of recognizing faces in headlights, power outlets, burnt toast, and even the corner of a shed.

How does this phenomenon of pattern recognition apply to ChatGPT and other generative AI tools that are taking the world by storm in 2023? It applies because people are recognizing patterns of humanity in these AI tools. Is that a good thing? Let's explore.

Being Kind to Robots

A recent post I saw on LinkedIn talked about how the poster would use words like "please" and "thank you" when interacting with generative AI tools. The reason given for this politeness was that decorum, politeness, and humanity are important in every interaction we make. If we dehumanize our interaction with AI, how will we treat the people we communicate with?

While I understand the intent and sentiment of treating our fellow humans with dignity and respect, there's one significant flaw. AI tools aren't humans, and at this point, they don't really even get that close.

The Necessary AI Disclaimer

This is the part where I state that everything here is subject to change due to the rapid acceleration of AI technology as it becomes available to the masses. Will there be a point where AI technology achieves a level of intelligence and/or sentience that makes it rival human life? Perhaps. Are we there yet? No. With that out of the way, please read on.

How Generative AI Works (sort of)

On the surface, it seems perfectly harmless to talk to an AI like it's a person, but there could be real negative implications of personifying technology tools.

To understand why there may be negative implications, it's important to have a basic grasp of how this AI technology retrieves and shares information. For an in-depth guide to these tools, I suggest reading Stephen Wolfram's post entitled "What Is ChatGPT Doing...and Why Does It Work?"

Did you get all that? Yeah, I didn't either, but at least we gave it a shot. Now let me share my very basic overview:

The neural networks found in tools like ChatGPT mimic the processes of the human brain on a much less sophisticated and dynamic level than the actual human brain.

The job of one part of the system is to interact with humans. Conversation is "learned" by the AI over time, through specific training and through live interaction with humans. Over time it gets better and better at answering questions and providing feedback.

Connected to that is a second system, called a language model, that internalizes data from a particular source. In most cases, this is a gigantic dataset from the internet, but it wouldn't necessarily have to be. Corporations or institutions may be able to use this kind of technology to manage their own specific or proprietary knowledge base. It's pretty cool.

Really, Really Powerful Autocorrect

The systems work with each other to interact with the user and provide responses based on a predictive outcome (think of autocorrect on your phone, but on a much more powerful level).

The most probable response, based on connections made within the neural network, gets output to the end-user — let's call him Steve — who then accepts it as being 100% correct without any fact-checking, and publishes it to his website.

Notice that I said the most probable response, but I didn't say the most correct response. Generative AI predicts what the most probable next word will be. Generally, that'll be the correct word, but not always.

Sorry, Steve.
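To make the "most probable, not most correct" point concrete, here is a minimal toy sketch in Python. It is not how ChatGPT is built (real tools use large neural networks, not simple word counts), and the function names and the tiny training text are made up for illustration. It only shows the basic idea: the program picks whichever word most often followed the previous word in its training text, whether or not that word is true.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def most_probable_next(follow_counts, word):
    """Return the most frequent follower of `word` and its estimated probability."""
    followers = follow_counts.get(word.lower())
    if not followers:
        # The word never appeared (or never had a follower) in the training text.
        return None, 0.0
    best_word, best_count = followers.most_common(1)[0]
    return best_word, best_count / sum(followers.values())


# Hypothetical training text: the wrong answer simply shows up more often.
corpus = (
    "the capital of australia is sydney . "
    "the capital of australia is sydney . "
    "the capital of australia is canberra ."
)

model = train_bigrams(corpus)
word, probability = most_probable_next(model, "is")
print(f"Most probable word after 'is': {word} ({probability:.0%})")
```

In this made-up text, "sydney" follows "is" twice and "canberra" only once, so the toy model confidently picks "sydney" even though Canberra is the actual capital. That is Steve's problem in miniature.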

Straight Outta Blade Runner?

Because of the way AI can interact with people, and because of the vast amounts of data it can access and analyze, people tend to make the mistake of elevating AI to human status (or higher).

"More human than human" - to steal a motto from the movie, "Blade Runner".

But they're not human. We're not yet dealing with the human-replicant androids found in "Blade Runner". Right now, generative AI tools are advanced computer programs that can sort through vast amounts of data. Their networks are far less sophisticated than the human mind.

Note: Don't forget my earlier disclaimer before reading on.

In other words: Not human. Not even really that close yet.

I'm Guilty

On my podcast, I'll occasionally use an AI-generated voice and pass it off as a talking robot. I do this for humor and to break up the monotony that can sometimes arise from a single-host podcast. My intent is never to make it seem like I am interacting with a real sentient intelligence. I do it for fun.

But even in fun, when we personify digital tools like ChatGPT, we impart upon them a level of authority that they don't necessarily have. Remember the pareidolia, or pattern recognition, we talked about earlier?

Once we see these tools as people, we're more likely to accept their output as fact, even though they often surface incorrect information. This is even more true when your AI "friends" are coming from institutions of authority like Microsoft and Google.

The internet already has a significant problem with the spread of misinformation and disinformation. Unchecked usage of AI as an authoritative voice could only worsen the problem.

The Creep Factor

News stories started to pop up over the last week from journalists who used the new Bing search engine, which incorporates a more advanced version of OpenAI's system than what ChatGPT currently uses. Reports of strange and unnerving interactions between users and the Bing interface began to surface.

In every instance that I saw, weird stuff happened because users were treating the system as a person. They were pushing it past its intended limits (at least as the tools currently exist).

They tried to ask it questions or provoke it as if it were human. Part of this is because the system has a chat interface. People are accustomed to chatting with other people — not interacting with machines.

Note: Microsoft has "fixed" this issue in the beta of the new Bing AI interface by limiting the number of chat messages per session to just 5, and total chat queries per day to 50. That limited number keeps stuff from getting too spicy, apparently.

If all that's not enough, distributors of AI tools just can't help themselves from giving their systems human — usually female — names. Though slightly different technologies, Alexa and Siri come to mind.

One of the first GPT-based generative AI writing tools, Jarvis, was named after the AI built by Tony Stark in Iron Man. It got renamed to Jasper after what was certainly a strongly worded letter from Disney. The new Bing AI interface was reportedly given the codename Sydney — though some reports state there's an evil version called Riley. I don't know what that's all about.

If it's named like a human, chats like a human, and confidently provides answers like a human, people will think it's a human.

AI Doesn't Have to Be Human to Be Awesome

At the end of the day, it's not human, and that's perfectly fine. AI has nearly limitless possibilities and we have only scratched the surface of what it will do.

Right now, it's a power tool for content creators that can improve speed and efficiency. It's great for generating ideas, for summarizing large amounts of text, for uncovering search keywords, for building social copy and meta descriptions, and for coming up with catchy titles.

Yes, in some cases it's even good for writing long-form content like emails and blog posts.

Just like any other power tool, some traditional practitioners will choose to do things the way they've always done them. Others will embrace AI and use it in all kinds of new and interesting ways. Most people will fall somewhere in the middle of those two extremes. Right now, I think all of that is just fine.

As long as the end result of content achieves its intended goals and resonates with the intended audience, does it really matter how it was created? That's the lesson we should be taking from AI right now — not that it's a creepy human analog.

Is generative AI "more human than human?" No. But it's pretty cool anyway.

Listen Up!

I have two rock & roll recommendations this week, and both are metal! Listener discretion applies as there are grown-up themes throughout both records.

White Zombie: Astro-Creep: 2000 – Songs of Love, Destruction and Other Synthetic Delusions of the Electric Head (1995)

The first is the 1995 release from White Zombie, which features the track “More Human Than Human,” taken from the motto used by the Tyrell Corporation in the movie “Blade Runner” (inspired by the Philip K. Dick novel, “Do Androids Dream of Electric Sheep?”).

Motörhead: Overkill (1979)

To honor Lemmy Kilmister, whose likeness on the wall of my shed opened this article, I felt it was only right to share one of the great Motörhead records. I chose “Overkill,” a really good heavy rock record that contains a song called “Metropolis.” I don’t really know what the song is about and the lyrics don’t really clue me in, but the 1927 German silent film “Metropolis” was one of the first to feature a human-like artificial intelligence. Close enough for me!

Generative AI: More Human Than Human...?